Very interesting, even on my first try.

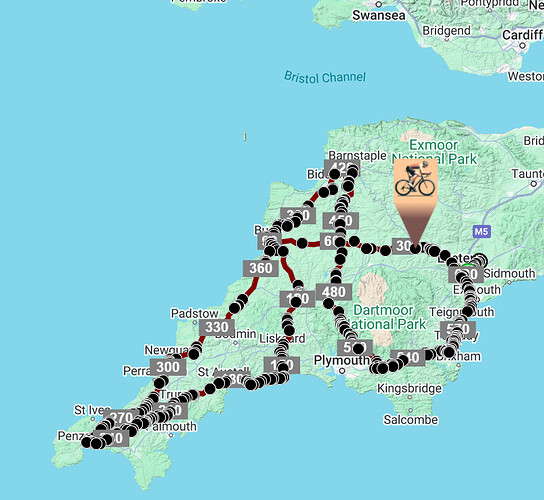

Just for info, I loaded a hilly 600km ride (using a gpx) I helped organise in SW England last summer. All our planning was on RwGPS, though I did check it on OpenRunner.

Distance was within 300m; however, the climb showed over 3000m more (11714 versus 8432). Riding it (Garmin, with its errors) gives a little under 9000m.

Does this process work to calculate the CdA for just the bike, with no rider?

Yep, that’s what I did for some of my initial testing. I can run it to generate the CdA for any 3D object, and I have totally compared some of the bikes I have lying around, and my friends’ bikes.

Is your validation that your code works just that your point cloud model looks better than another point cloud model and in images on their website? And they say they can hit 2%?

Have you run standard validation geometry to see if what you scan/process/calculate matches known coefficients?

My validation is that every test I’ve performed has been within expectations: easily within single-digit percentages of the known differences between rim depths, tire sizes, and positions, with some correlated against power and speed numbers determined through pass efforts at a nearby velodrome.

Current revenue from this beta, at around the $30 price point, is allocated specifically toward conducting reference tests in a Wind Tunnel and publishing the results, white papers, etc. (If I start to get volume above what my teammates and I can handle before scaling, I may jump the price to $50.)

Additionally, I only recently discovered that company’s existence and made an offhand comment. I do not know how they estimated their accuracy, and I will not state I’m within 2% until I have repeatable, Wind Tunnel tested results published in a whitepaper.

Currently, it seems to track closely with expectations, and we have an expert on board with experience testing cyclists in Wind Tunnels. From his perspective, it’s already far beyond many of the other at-home methods. As an example, simply cutting away a few frontal wires on a model drops the power needed to maintain 22.3mph at 7.5 degrees of yaw by 0.29 watts.
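For a sense of scale, here is a rough sketch of what a 0.29 W change means in CdA terms, using the standard drag power relation P = 0.5 · ρ · CdA · v³. The air density (1.225 kg/m³) is an assumption, and so is treating 22.3mph as the airspeed seen by the rider; the actual simulation settings are not stated in the thread.

```python
AIR_DENSITY = 1.225   # kg/m^3, assumed sea-level standard air (not stated in the thread)
MPH_TO_MS = 0.44704   # exact mph -> m/s conversion


def aero_power(cda_m2, speed_ms, rho=AIR_DENSITY):
    """Power in watts needed to overcome aerodynamic drag: 0.5 * rho * CdA * v^3."""
    return 0.5 * rho * cda_m2 * speed_ms ** 3


def cda_change_for_power_change(delta_watts, speed_ms, rho=AIR_DENSITY):
    """Invert the drag power relation to get the CdA change behind a power change."""
    return delta_watts / (0.5 * rho * speed_ms ** 3)


speed = 22.3 * MPH_TO_MS
delta_cda = cda_change_for_power_change(0.29, speed)
print(f"speed = {speed:.2f} m/s, delta CdA = {delta_cda:.5f} m^2")
```

Under these assumptions the 0.29 W saving corresponds to a CdA change of roughly 0.0005 m², which gives an idea of how small a difference is being discussed.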

That seems like OpenFOAM, have used it myself to do my own testing. I do CFD modeling as part of my day job, but not for bicycling. You can easily modify the number of decimals in ParaView. OpenFOAM is perhaps the most underrated engineering software out there. Wish I had more time to play with it (I am in corporate management these days).

Love the service that you are offering, will definitely give it a go.

Very kind of you to say that. It is OpenFOAM, and I just went into the force coefficient function log files (“forceCoeffs.dat”) and counted the precision. I wasn’t sure if that was precisely what was being brought up, but assumed it could be.
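As an illustration of that kind of check, here is a minimal sketch that reads a whitespace-delimited coefficient log, counts the decimals that were written, and averages Cd over the last few time steps. The sample text, the column layout, and the reference area `A_REF` are all assumptions made for the example; the real file layout depends on the OpenFOAM version and the function object settings.

```python
# Illustrative sample only: made-up numbers in an assumed column layout.
SAMPLE_LOG = """\
# Cd : drag coefficient (illustrative sample, not real output)
# Time          Cd              Cl
100             0.5123400       0.0123400
200             0.4987600       0.0110000
300             0.5012300       0.0115000
"""

A_REF = 0.5  # m^2, hypothetical reference area used to turn Cd into CdA


def parse_coeffs(text):
    """Return (column names, rows of raw string tokens); the last '#' line is the header."""
    header, rows = [], []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            header = line.lstrip("#").split()
        else:
            rows.append(line.split())
    return header, rows


def decimal_places(token):
    """Count digits after the decimal point in a plain (non-scientific) number token."""
    return len(token.split(".")[1]) if "." in token else 0


def mean_cd(header, rows, last_n):
    """Average the Cd column over the last `last_n` rows."""
    idx = header.index("Cd")
    tail = [float(r[idx]) for r in rows[-last_n:]]
    return sum(tail) / len(tail)


header, rows = parse_coeffs(SAMPLE_LOG)
cd = mean_cd(header, rows, last_n=2)
print("decimals written:", decimal_places(rows[0][header.index("Cd")]))
print(f"mean Cd = {cd:.6f}, CdA = {cd * A_REF:.6f} m^2")
```

Note that the number of decimals written to the log says nothing about how accurate the result is; it only reflects the output format.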

Yeah, that software has a learning curve, and I’m having sooo much fun playing with it.

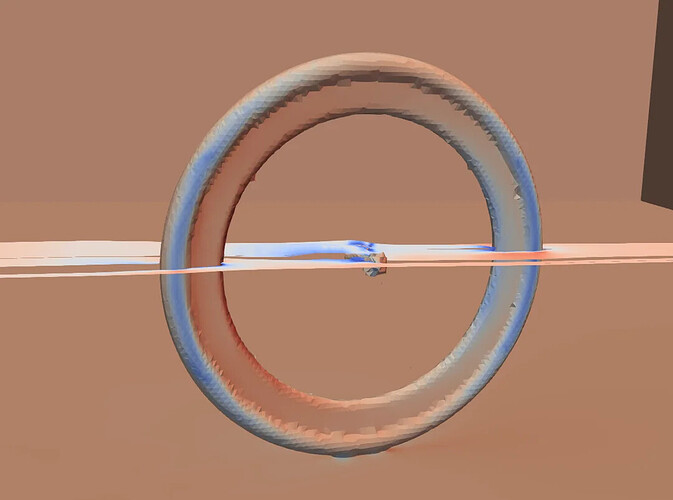

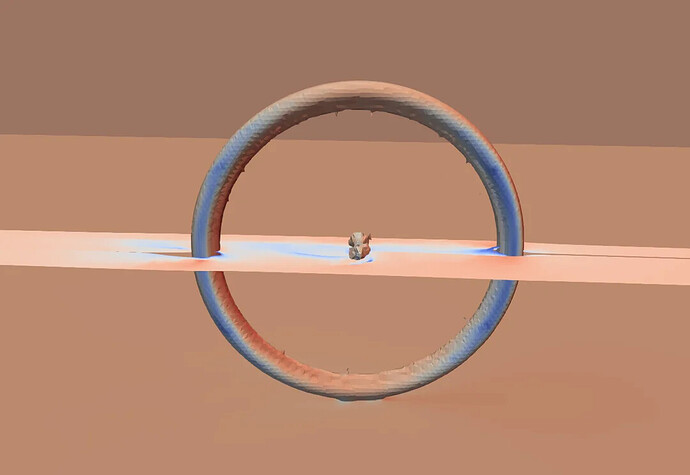

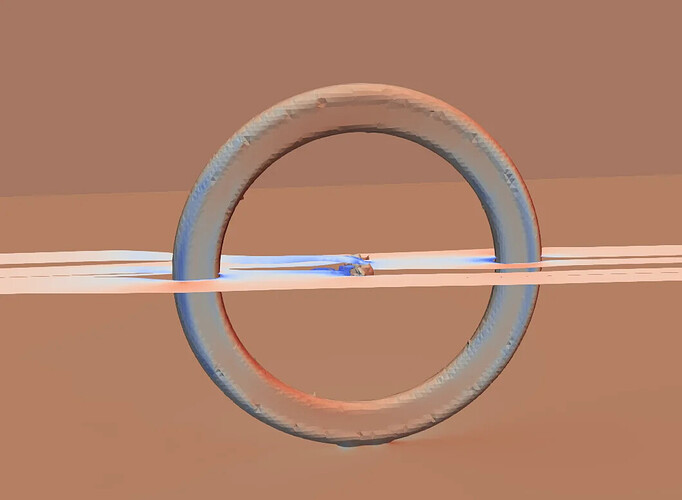

As a gravel cyclist, I had heard that if the tire is much wider than a deep rim, the flow separates and the deep rim isn’t worth its extra weight and instability. That appears to be the case:

28mm wide, 60mm deep rim, 45mm tire

28mm wide, 17mm deep rim, 45mm tire

45mm wide, 60mm deep rim, 45mm tire

The results are:

The 28 mm rim width, 17 mm depth setup produces about 19.64 W of aerodynamic drag power.

The 28 mm width rim, 60 mm depth setup produces about 18.98 W.

The 45 mm width rim, 60 mm depth setup produces about 16.54 W.
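To put those three numbers side by side, a short sketch that computes the saving of each setup relative to the shallow 17 mm rim. Only the wattages come from the post above; the dictionary keys and function name are just labels for the example.

```python
# Aerodynamic drag power reported above, in watts, all with a 45 mm tire.
RESULTS_W = {
    "28 mm wide, 17 mm deep": 19.64,
    "28 mm wide, 60 mm deep": 18.98,
    "45 mm wide, 60 mm deep": 16.54,
}


def savings_vs(results, baseline_key):
    """Return {setup: (watts saved, percent saved)} relative to the baseline setup."""
    base = results[baseline_key]
    return {
        name: (base - watts, 100.0 * (base - watts) / base)
        for name, watts in results.items()
    }


for name, (watts, pct) in savings_vs(RESULTS_W, "28 mm wide, 17 mm deep").items():
    print(f"{name}: saves {watts:.2f} W ({pct:.1f}%)")
```

The deep but narrow rim saves only about 0.66 W (roughly 3%) over the shallow one, while the deep rim that matches the tire width saves about 3.10 W (roughly 16%), which is consistent with the flow separation explanation.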

Also, I’m going to get bored at some point and test some wacky things, like, which TT bike on a seasucker on the top of the car saves the car the most mpg.

Or, which road bike position is fastest when tested at Mach 2.

Truly fun software. I was only able to grasp it because I had 3D modeling and programming experience, and I’m keeping it relatively simple with a single inlet/outlet external flow sim (I scale the models to a known rim size, by the way): slip on the walls and ceiling, no slip on the ground. At the moment, I’m using pimpleFoam, but I’m playing with different solvers/options.

I would be interested to see a test comparison between Position A, then Position A with a long stick with a spherical ball on the end sticking somewhere out of the rider and far away enough that you suspect it is in the free stream (maybe out of the wheel axle)

If you know the size of the ball then you’d know the rough theoretical CdA difference between the two setups.

Other things would be videoing different sized spheres and comparing the results?

Maybe this is another way to validate (at least the video portion) before heading to the wind tunnel?

Is this something you’ve already looked at? I don’t doubt that openfoam would be good at picking up these differences (e.g. CdA of different spheres) but it might be a good way to validate the video to model building pipeline.
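The sphere idea can be sketched numerically. A smooth sphere in subcritical flow has a drag coefficient of roughly 0.47, so its expected CdA contribution is about 0.47 times its frontal area. The Cd value is a textbook approximation that depends on Reynolds number and surface roughness, and the drag of the stick and any interference with the rider are ignored here.

```python
import math

SPHERE_CD = 0.47  # assumed typical smooth-sphere value in subcritical flow


def sphere_cda(diameter_m, cd=SPHERE_CD):
    """Approximate CdA (m^2) of a sphere in the free stream: Cd * pi * r^2."""
    radius = diameter_m / 2.0
    return cd * math.pi * radius ** 2


for diameter_cm in (10, 15, 20):
    cda = sphere_cda(diameter_cm / 100.0)
    print(f"{diameter_cm} cm sphere: expected delta CdA of about {cda:.4f} m^2")
```

If the pipeline reports a difference between "Position A" and "Position A plus sphere" that is close to this figure for several sphere sizes, that would be some evidence the video-to-model step preserves size and shape reasonably well.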

Just want to say you are one resourceful individual. I love those stories of people turning their curiosity into a business. And now you got a pro athlete (Ben) with his own (not huge but very good) YouTube channel interested. Don’t screw this up!

Congratulations on this product; I hope it does well. I have done a lot of testing over the years, and not a lot more can be done with my position; most of the low hanging fruit is gone. The question now is, can your product tell the difference between different skin suit materials or different tire treads, or whether one pair of ribbed aero socks is better than another, etc.?

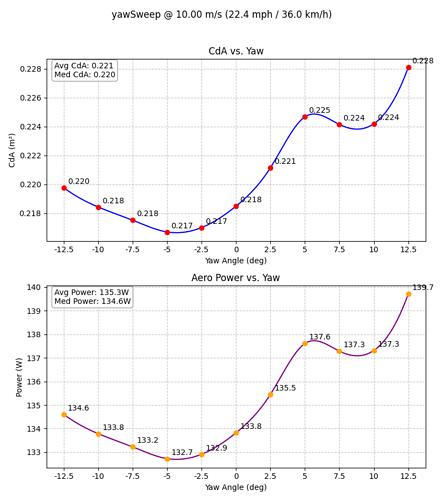

Hi, I’ve been updating this process constantly. A single test is now actually 33 tests, as I’m testing 11 yaw angles and 3 wind speeds simultaneously, and I produce graphs like the one above.
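A minimal sketch of how such a 33-case matrix could be laid out. The specific yaw angles and speeds are hypothetical (the thread only gives the counts), and setting yaw by rotating the inlet velocity vector is just one possible approach, not necessarily the one used here.

```python
import math
from itertools import product

# Hypothetical values: the thread only says 11 yaw angles and 3 wind speeds.
YAW_ANGLES_DEG = [-12.5 + 2.5 * i for i in range(11)]  # -12.5 ... +12.5 degrees
SPEEDS_KMH = [30.0, 40.0, 50.0]


def inlet_velocity(speed_ms, yaw_deg):
    """Split an airspeed into (along-bike, crosswind, vertical) components for a yaw angle."""
    yaw = math.radians(yaw_deg)
    return (speed_ms * math.cos(yaw), speed_ms * math.sin(yaw), 0.0)


cases = [
    {"speed_kmh": kmh, "yaw_deg": yaw, "inlet_U": inlet_velocity(kmh / 3.6, yaw)}
    for kmh, yaw in product(SPEEDS_KMH, YAW_ANGLES_DEG)
]
print(len(cases), "cases")
```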

That being said, will this system, or even true Lidar, pick up these subtle material changes and use them to any extent in the test? Not really. Could I meticulously model them, add said textures in post, and see a difference? Maybe.

I’m as aero-conscious as anyone else: “aero” tube angles, integrated cockpits, aero head unit mounts, and aero socks probably contribute something and may be considered “free speed” if they don’t sacrifice breathability, stability, etc.

At the end of the day, if you’ve optimized your position flawlessly through true Wind Tunnel testing, and you’re wearing the latest and greatest “aero” clothes, have shaved legs, etc., this service wouldn’t be super beneficial.

However, if you’re pursuing any long-distance TTs or events, say mixed surface or otherwise, Wind Tunnels cannot realistically go slower than 40km/h. Since CFD testing is a fully controlled environment, I can run any precise speed, mimicking exactly the average you may be after.

Additionally, it allows for very cheap and quick assessment of many different things, such as specific tire widths and external rim widths. For example: want to run 30mm tires on a TT bike that can fit them, but only have a 22mm external rim width? 60mm depth or a disc wheel? I’ve already modeled many wheels and have those lying around for assessment, so I can easily answer questions like these, as well as water bottle placement, etc. It’s also trivial to try many different air pressures and settings of all kinds.

So, if you’ve truly tested all of the positions, item placements, etc. at the exact speeds you plan on cycling at in a Wind Tunnel, then no: unless I manually model the clothing texture (and even then, it’s not really treated like fabric in the CFD software), the 0.01 watt difference is likely within the margin of error, and this product may not be for you.

However, if you think about it creatively, there may be some other specific scenarios that you haven’t “quite” looked at which this would make easy testing for.

Isn’t the opportunity here that this system would be used to do all the low hanging fruit, leaving the time/money for the actual real world testing (wind tunnel/velodrome) for the ‘1%’ stuff?

Challenge is that the ‘keen’ have already done the low hanging fruit, and so you’re trying to position your offer as the first line to those that haven’t been motivated to ‘care’ up to now. As per this thread, there’s a bias to the people perhaps past the basics being the ones that are easier to engage.

Agree 100% that entry level testing is a real opportunity. The “just do some footage at home, submit and pay $30, voila” is a big draw. That’s a low threshold for professional aero testing if there ever was one.

I’d say if @Eric_Semianczuk can find a way to do 10 different setups for a price to the tune of $100-200 and have the results somehow validated (how? work with a wind tunnel tested PRO athlete perhaps), the business could really take off.

Regardless, I’m in awe of him even trying and will never doubt his strategy, whatever he chooses to do.

There are plenty of people (well, at least one anyway… me) who don’t have the time/resources/expertise/location to do proper testing and who would jump at this with a bit more data/validation behind it. I want to work out my bottle setup.

I’d be very keen to try it out if I knew how robust the video-to-model bit was.

Either known position, and then add a known sphere etc as I said above and see if the numbers make sense.

Or something like 10 different videos of the same position/athlete to see the CdA spread of the videos.

Like the other poster said - “video at home and voila” - seems like a brilliant plan but only if the model doesn’t vary much from video to video (of the same position/setup)

I actually think video to video variability might be a GOOD thing in some cases as a means to validate how accurately you can get a correct model from multiple videos. Similar to how the typical aero testing session could go (with 3 positions/variables) CCAABBABC, for example, multiple models from videos taken on the same day could be a validation of the possible magnitude of the effect of those changes even if the models end up a bit different.

I’m stoked to see how the wind tunnel results go. I would absolutely pay and get on board if I had any racing priority at all in the next few months, and at ~$30-50 I will absolutely do it in the future.

At an extreme, if there’s a ±0.02 CdA range across say 5 videos of the same position (get a different person to film each one with the same instructions), then this makes looking for small changes much harder.

If the variation is much smaller, then we can pick out smaller differences.

Essentially, I would want to know that the improvement (or lack of) I’m seeing is due to the change I’ve made (e.g. adding BTA bottles), and not just due to different camera work.
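A small sketch of that repeatability check: take the CdA from several videos of the same position, compute the spread, and compare a claimed improvement against it. The CdA values are made up for illustration, and the "larger than two standard deviations" rule is a crude heuristic chosen for the example, not a method described in this thread.

```python
import statistics

# Hypothetical CdA results (m^2) from 5 videos of the same rider and position.
REPEAT_CDA = [0.250, 0.262, 0.241, 0.255, 0.247]


def repeatability(cda_values):
    """Return (mean, sample standard deviation, half of the min-max range)."""
    mean = statistics.mean(cda_values)
    stdev = statistics.stdev(cda_values)
    half_range = (max(cda_values) - min(cda_values)) / 2.0
    return mean, stdev, half_range


def is_detectable(delta_cda, stdev, k=2.0):
    """Crude rule of thumb: a change counts only if it exceeds k standard deviations."""
    return abs(delta_cda) > k * stdev


mean, stdev, half_range = repeatability(REPEAT_CDA)
print(f"mean = {mean:.4f}, stdev = {stdev:.4f}, range = +/-{half_range:.4f}")
for change in (0.005, 0.020):
    print(f"change of {change:.3f} m^2 detectable: {is_detectable(change, stdev)}")
```

With a spread like this, a 0.005 m² change from adding a bottle would be lost in the video-to-video noise, while a 0.020 m² change would stand out. Averaging several videos per setup would tighten that threshold.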

“We got 10 different people to film schleppenbike’s position and the CdA of the model was within XXXX” would be a good marketing / validation statement

Pricing and validation, the two best discussions. So, test-wise, at this point I’ve rebuilt the aero testing portion of the pipeline to actually perform 33 tests for one video (11 yaws at 3 different speeds). That’s not quite 10 separate setups for $100, but technically it’s a ton of value for $30 at the moment.

I aim to keep this service as cheap as I can, but there is still a bit of very specialized manual 3D work (cutting away the trainer, re-adding any wheel parts that may have been cut away, adjusting reconstruction settings if a poor reconstruction was derived, potentially fixing any “holes” or “gaps” in the model). On top of that, the process takes an extremely powerful computer (RTX 4090 + Threadripper 7970X + a ton of RAM) running at 100% for around 40min of processing per video, sometimes more (my turnaround time is within 24 hours).

The point is, I need far higher quality than the 3D scanning capability of an iPhone, and I am basically attempting to mimic the quality of a handheld 3D LIDAR scanner (these cost a lot).

Scaling with cloud instances can help offset this (not easy, as much of this workflow is custom/research-based software), and refining, say, custom AI agents from scratch for some portions of the “manual work” is technically possible (though extremely challenging). Still, I’m having a hard time running it myself, as the creator of said backend pipeline…

So, currently, pricing reflects the small number of users. If I start receiving too many orders for my team and me to handle, I’d raise the price while scaling, to keep orders coming in at a reasonable, manageable rate, and then drop the price as I build up a larger team, add more automation, and can handle more orders.

Regarding quality: the testing software, OpenFOAM, is well regarded and heavily scrutinized, and the mesh I generate with the process is of quite high quality (given all of the processing power and code/research tools behind it, it’s currently some of the best that can be generated, and it easily rivals many lower-end, but still very expensive, LIDAR guns).

Our immediate goal is to get it rubber-stamped in a wind tunnel though. Even though I can say that as much as I want, unless I have a direct comparison, that’s always going to be a sticking point.

I’m working several angles to achieve this, so it’s a reasonable possibility I’ll have an accuracy % difference in the near future. However, if we do end up taking a loan or accepting investor money to fund this, it will absolutely necessitate a jump in price, as I’d have to pay it back asap or be beholden to an investor (more about profit then).

(If anyone’s going to a Wind Tunnel for some testing, I’d happily fly out to take a video!)

Lastly, on the pricing point again: with multiple videos, this can easily and repeatably show the difference between even slight position adjustments, water bottle locations, a disc wheel, an aero helmet, etc.

So, while it might seem unreasonably pricey for 10 tests to cost $300, the knowledge from those tests can quite easily shave off more watts than going from a 60mm deep rear wheel to a full disc. I built this initially to optimize my setups and my buddy’s positions, and only tried to make it a venture when I got most of it automated (originally, a single video-to-aero test took several hours of manual work in very challenging programs and quite a lot of custom scripting).

The value proposition here is dispelling the notion that you need to buy a very expensive TT bike, in favor of aero bars, an aero helmet, clothes, deep wheels, and some position tuning on a road bike. So, in theory, it could save you money (it at least kept me from buying some deep aero wheels for my gravel bike; I’m not fast enough for it to really matter, it would seem lol).

Great points. First, different people filming, different cameras, etc. don’t actually contribute to particularly different outputs; this is the beauty of the field of photogrammetry. Differences in lighting, camera quality, etc. can cause holes and voids to appear, but this can be fixed quite easily: to keep compute resources reasonable, I’m only using around 300 of the sharpest, clearest frames extracted from the video, and I could up that to 3000 and get a very high quality recreation.
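How the sharpest frames are picked isn't described here, so the following is only a sketch of one common approach: score each frame by the variance of its Laplacian (blurry frames have weak edges and score low) and keep the top N. It uses plain nested lists as tiny stand-in grayscale images so it runs without any image library; a real pipeline would work on decoded video frames.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Sharpness score: variance of the 4-neighbour Laplacian over interior pixels."""
    height, width = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, height - 1)
        for x in range(1, width - 1)
    ]
    return pvariance(responses)


def sharpest_frames(frames, keep):
    """Return the indices of the `keep` sharpest frames, in original video order."""
    ranked = sorted(range(len(frames)), key=lambda i: laplacian_variance(frames[i]), reverse=True)
    return sorted(ranked[:keep])


def checkerboard(size, low, high):
    """Toy test image: a higher contrast stands in for a sharper frame."""
    return [[low if (x + y) % 2 == 0 else high for x in range(size)] for y in range(size)]


frames = [
    [[128] * 5 for _ in range(5)],  # featureless frame, no edges at all
    checkerboard(5, 0, 255),        # strong edges
    checkerboard(5, 100, 150),      # weak edges
]
print(sharpest_frames(frames, keep=2))
```

In the thread's terms, `keep` would be around 300 (or 3000 for a higher quality reconstruction at a higher compute cost).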

Just as, for every video I’ve taken thus far, the front and back wheel are always the same size as each other, so too are the proportions and such of the human portion of the model.

Additionally, I manually scale each one by the wheel/rim size to ensure that it is as accurate as possible.
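A minimal sketch of that scaling step, assuming the reference dimension is the 622 mm bead seat diameter of a 700c rim; which rim dimension is actually measured is not stated in the thread. It also shows why the step matters: frontal area, and therefore CdA, goes with the square of the scale factor.

```python
KNOWN_RIM_DIAMETER_M = 0.622  # ISO bead seat diameter of a 700c rim, assumed reference


def scale_factor(measured_diameter, known_diameter_m=KNOWN_RIM_DIAMETER_M):
    """Factor that maps arbitrary reconstruction units to metres."""
    return known_diameter_m / measured_diameter


def scale_vertices(vertices, factor):
    """Uniformly scale a list of (x, y, z) vertices about the origin."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


def cda_error_from_scale_error(scale_error_fraction):
    """Relative CdA error caused by a relative scale error, since area scales as length^2."""
    return (1.0 + scale_error_fraction) ** 2 - 1.0


factor = scale_factor(measured_diameter=1.244)  # hypothetical rim size in raw model units
print(f"scale factor = {factor:.3f}")
print(scale_vertices([(2.0, 0.0, 1.0)], factor))
print(f"1% scale error -> {100 * cda_error_from_scale_error(0.01):.2f}% CdA error")
```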

This being said… I haven’t tried that. Having discussed the idea with some team members, we’ll give it a shot in the near future: videoing the same person a bunch of times with different people recording on different devices to derive a ±CdA value. Thanks for the suggestion.

Are you looking for folks that want to do some testing with you? I’m happy to sign up, and can post results back to others on my experience

I think my position is good now, and would love to optimize as much as possible